Distributed AV1 Video Encoding

Hi everyone,

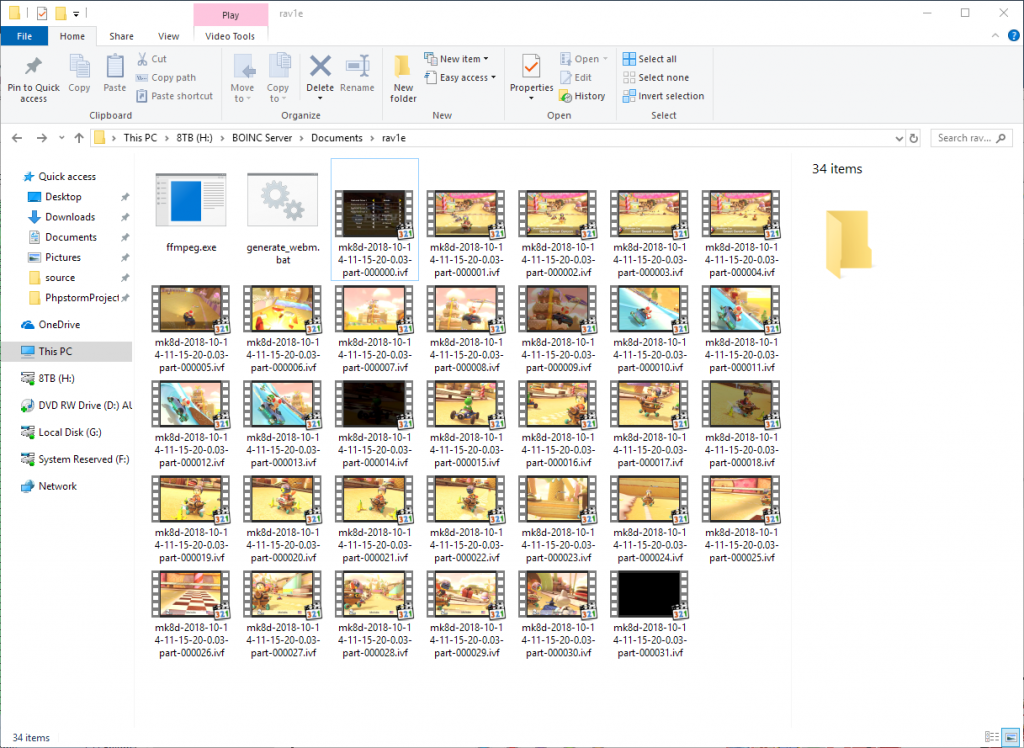

Yesterday, I began working on writing code to encode video files to AV1 in a distributed computing fashion using my private BOINC server. I wrote some PHP code and developed a Windows Forms software that will submit the video files to the distributed computing server so that it can send jobs to connected machines.

The encoding software I’m using is rav1e. This software is currently single-threaded, which means that encoding videos will take some time, especially when using the –speed 0 flag, which is the slowest mode available nut gives the best quality.

My solution at this time consists on splitting the video files into 1-second segments and then launching a rav1e encoding task for each part, each in one CPU thread. This significantly speeds up the encoding process because it can simultaneously encode several parts depending on the CPU being used. For example, if we split a video into a 1-second segment, and you have an AMD Ryzen 7 2700X CPU, this means that you can process 16 seconds at a time.

Preparing the input video file

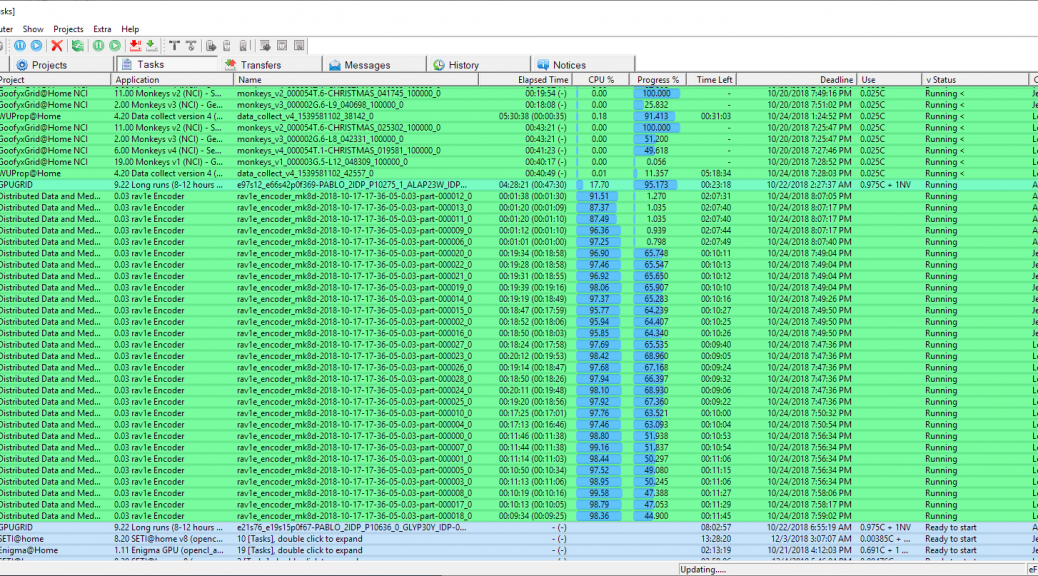

As I mentioned at the start, I wrote a Windows Forms software that will handle the video submission process. The software will first call ffmpeg to split the video into 1-second segments, extract the audio file and encode it to Opus using the desired bitrate, and then it will submit the 1-second segment video files to the BOINC server, generating a task for each file:

I’m submitting the video files using a quantizer setting of 175 and a speed of 0.

Once the tasks are generated, comes the processing.

AV1 encoding:

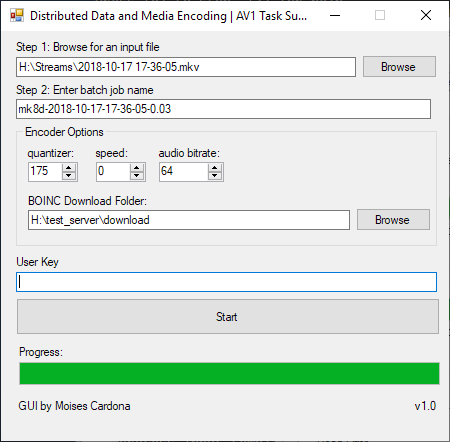

The BOINC client is the software responsible for contacting the server to retrieve the splitted files and initiate the tasks. It will download the rav1e executable from the BOINC server, the input video file, and will start the encoder. This process takes about 2 hours and 30 minutes on an AMD Ryzen 7 2700X CPU while for my Intel i7-3610QM and 4700MQ it took more than 3 hours. Still, the advantage is that I get to encode multiple segments at once, speeding the encoding of the source video significantly.

Here, you can see the encoding tasks from 4 of my machines:

Result files:

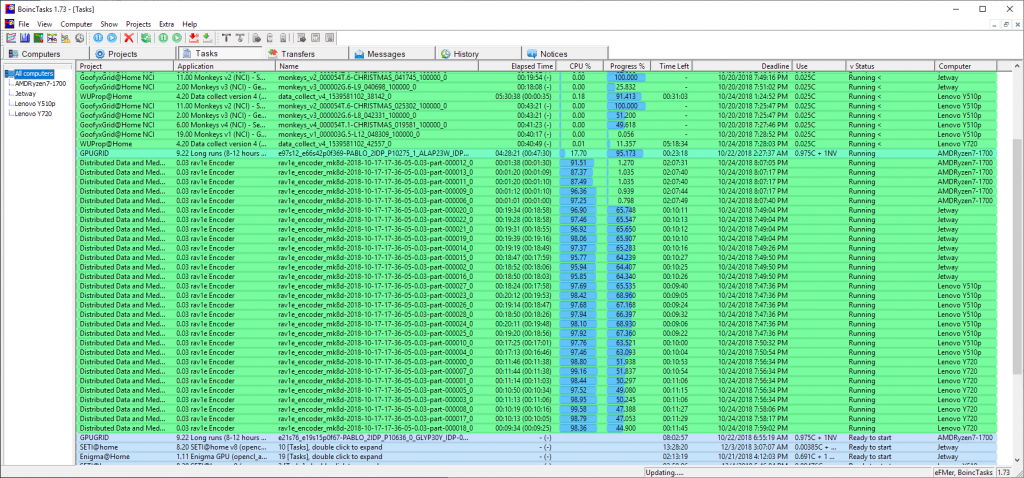

Once the encoding tasks are finished, they are sent back to the BOINC server which will mark the task as completed and will move the results to a defined folder:

Merging the files:

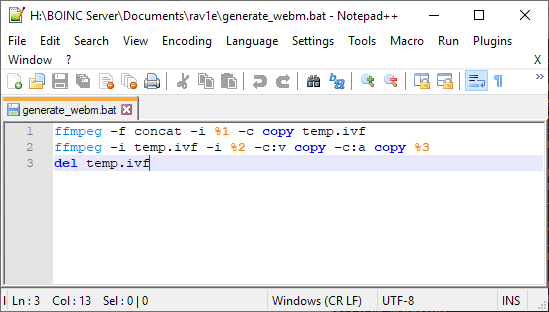

The final step is to merge the result files and also merge the audio file. For this, I wrote a simple bash script that calls ffmpeg’s concat function and then merges the concatenated file with the audio to generate one final .webm file. WebM is s container compatible with AV1 video files as well as Opus audio tracks:

The script takes 3 arguments. The first one is the list of files to concatenate, the second is the Opus audio file and the third and final one is the name for the output file.

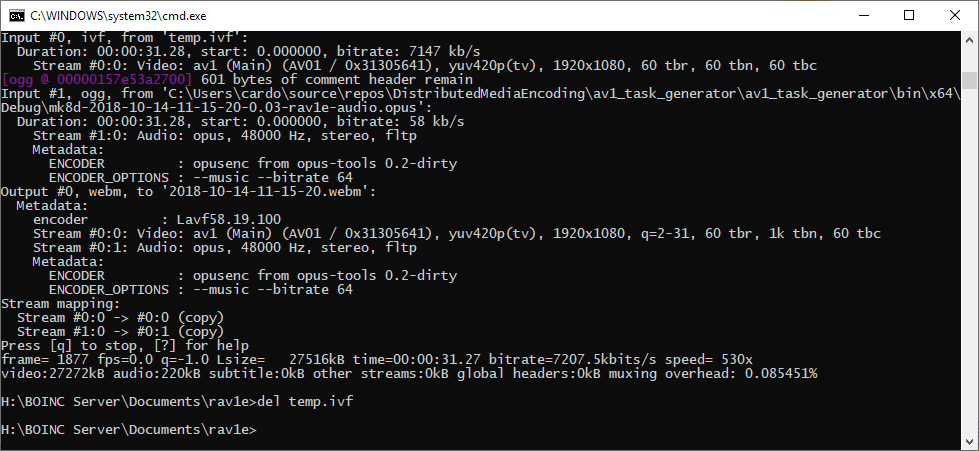

When we run it, we should see the following at the end:

The final file:

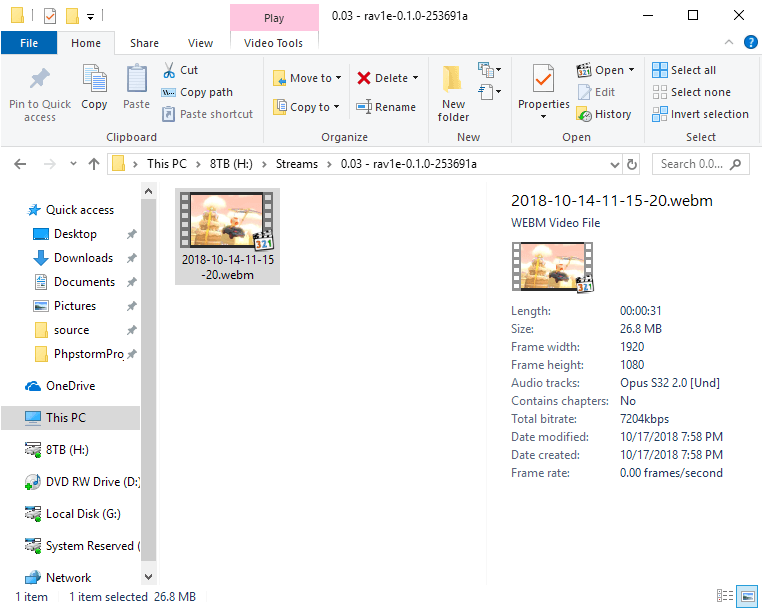

We can see the final encoded .webm file at the location we typed when we ran the above script:

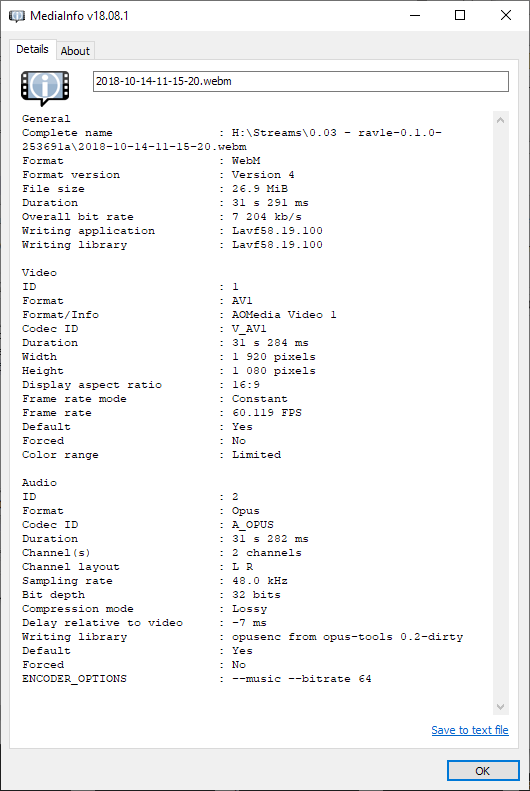

We can also check the file using MediaInfo:

And that’s it! BOINC is really useful in this case as I can encode multiple files and the server will handle all of the processing. The only manual part is where we run the merging script to generate the output file. However, it really speeds encoding by a lot. This is, until rav1e becomes multithreading, at which point, he BOINC server can still be used by changing the app to a multi-threading one.

Hope you enjoyed reading this post!